You need to put your data in a database and set up in-depth analysis pipelines. These tasks are much easier to accomplish when you have a system or framework that is built for scientific workflows. If you prefer to watch, I have a video where I go through all the steps in this tutorial.

## Enter Apache Airflow

Apache Airflow describes itself as follows: "Airflow is a platform created by the community to programmatically author, schedule and monitor workflows."

There are a ton of great introductory resources out there on Apache Airflow, but I will very briefly go over it here. Apache Airflow gives you a framework to organize your analyses into DAGs, or Directed Acyclic Graphs. A DAG is the bucket you throw your analysis in. If you aren't familiar with the term, it's really just a way of saying Step3 depends upon Step2, which depends upon Step1, or Step1 -> Step2 -> Step3.

Your DAG is made up of Operators and Sensors. Operators are an abstraction over the kind of task you are completing. These will often be Bash, Python, or SSH, but can also be even cooler things like Docker, Kubernetes, AWS Batch, AWS ECS, database operations, file pushers, and more. Then there are Sensors, which are nice and shiny ways of waiting for something to happen: a file appearing, a record appearing in a database, or another task completing.
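In an Airflow DAG file, dependencies like these are usually declared with the bitshift operator, as in `step1 >> step2 >> step3`, and the scheduler works out the run order. The sketch below shows what "directed acyclic" buys you without requiring an Airflow installation. It uses Python's standard-library `graphlib` (Python 3.9+), not Airflow's own API. The step names are the hypothetical ones from the paragraph above.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical workflow from the text: Step1 -> Step2 -> Step3.
# Each key maps a step to the set of steps it depends on.
dependencies = {
    "Step1": set(),
    "Step2": {"Step1"},
    "Step3": {"Step2"},
}


def execution_order(graph):
    """Return one valid run order, so every step runs after its dependencies."""
    return list(TopologicalSorter(graph).static_order())


def is_acyclic(graph):
    """A workflow graph is a DAG only if a topological order exists."""
    try:
        execution_order(graph)
        return True
    except CycleError:
        return False


print(execution_order(dependencies))  # ['Step1', 'Step2', 'Step3']
print(is_acyclic({"A": {"B"}, "B": {"A"}}))  # False: A and B wait on each other
```

A cycle, where A waits on B and B waits on A, has no valid order. That is why workflow graphs must be acyclic.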

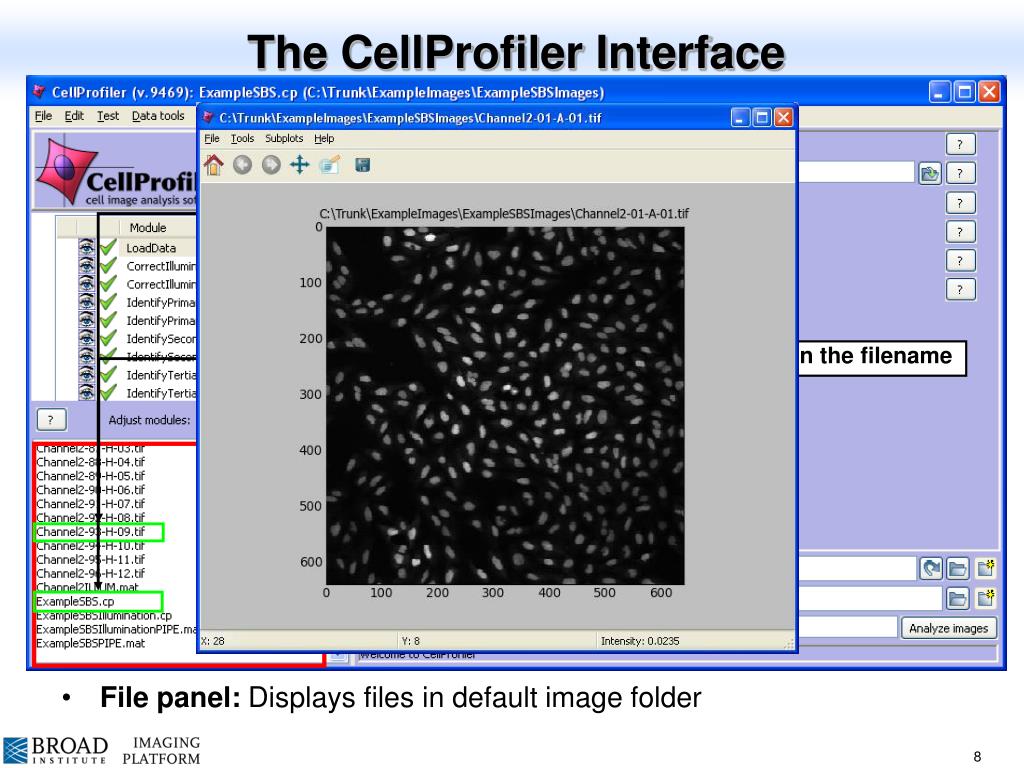

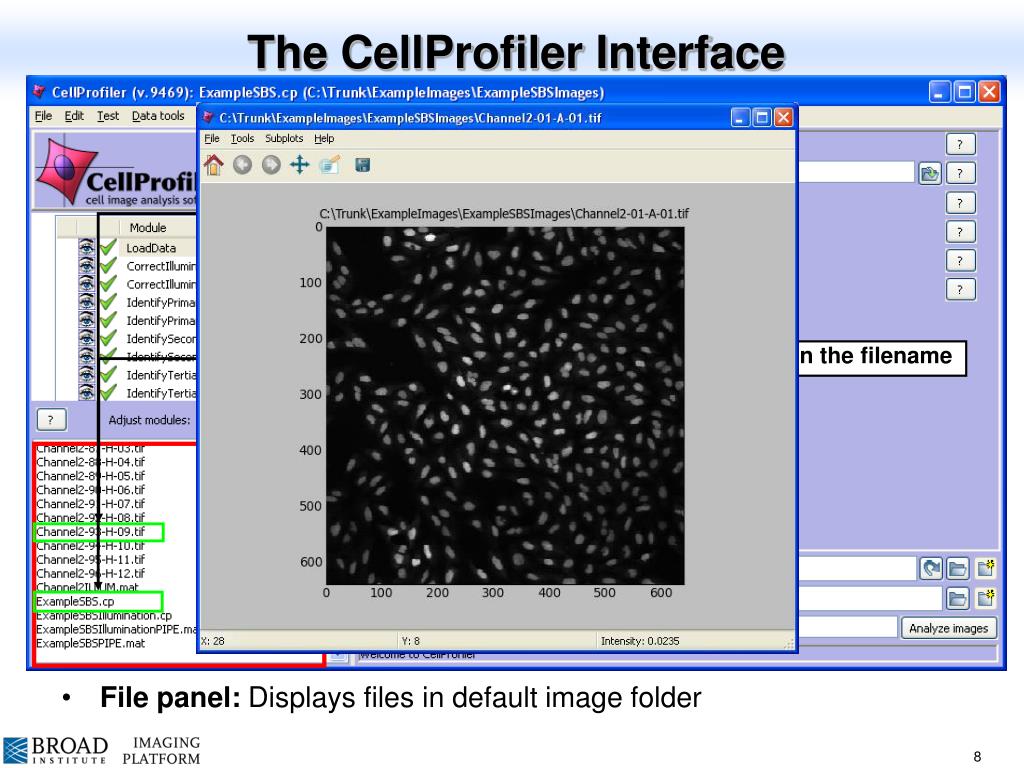
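Airflow ships ready-made sensors, for example `FileSensor` in `airflow.sensors.filesystem`. Every sensor accepts a `poke_interval` and a `timeout`. Conceptually, a sensor like the ones described above is just a poll loop. The plain-Python sketch below is an illustration, not Airflow's implementation; it waits for a file using the same two settings.

```python
import time
from pathlib import Path


def wait_for_file(path, poke_interval=5.0, timeout=60.0):
    """Poll until `path` exists.

    Returns True as soon as the file appears, or False once `timeout`
    seconds have passed without it appearing.
    """
    target = Path(path)
    deadline = time.monotonic() + timeout
    while True:
        if target.exists():
            return True
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            return False
        # Sleep for one poke interval, but never past the deadline.
        time.sleep(min(poke_interval, remaining))
```

For example, `wait_for_file("/data/plate01/done.flag", poke_interval=30, timeout=3600)` would check every 30 seconds for up to an hour. The path is hypothetical.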

If you are running a High Content Screening pipeline, you probably have a lot of moving pieces:

- Trigger CellProfiler analyses, either from a LIMS, by watching a filesystem, or through some other process.
- Keep track of dependencies between CellProfiler analyses: first run an illumination correction, and then your analysis.
- If you have a large dataset and you want it analyzed sometime this century, split your analysis, run it, and then gather the results.
- Once you have results, decide on a method of organization.

This repository hosts the results of processing example imaging mass cytometry (IMC) data hosted at 10.5281/zenodo.5949116 using the IMC Segmentation Pipeline available at ( ). Please refer to steinbock ( ) as an alternative processing framework, and to 10.5281/zenodo.6043600 for the data generated by steinbock.

The following files are part of the "analysis" folder when running the IMC Segmentation Pipeline:

- `cpinp.zip`: contains input files for the segmentation pipeline.
- `cpout.zip`: contains all final output files of the pipeline:
  - `cell.csv`: the single-cell features
  - `Experiment.csv`: CellProfiler metadata
  - `Image.csv`: acquisition metadata
  - `Object relationships.csv`: an edge list indicating interacting cells
  - `panel.csv`: channel information
  - `var_cell.csv`: cell feature information
  - `var_Image.csv`: acquisition feature information
- `histocat.zip`: contains single-channel `.tiff` files per acquisition for upload to histoCAT ( ).
- `ilastik.zip`: contains upscaled image crops in …
- … `.png` files per panorama and additional metadata files per slide.

The remaining files are part of the root directory:

- tiff files of all channels for analysis (`_full.tiff`) and their channel order (`_full.csv`)
- multi-channel images for ilastik pixel classification (`_ilastik.tiff`) and their channel order (`_ilastik.csv`)
- upscaled multi-channel images for ilastik pixel prediction (`_ilastik_s2.h5`)
- 3-channel images containing ilastik pixel probabilities (`_ilastik_s2_Probabilities.tiff`)
- segmentation masks (`_ilastik_s2_Probabilities_mask.tiff`)
- `config.zip`: contains the antibody panel used for the experiment.
- `cp4_pipelines.zip`: contains the CellProfiler pipelines for analysis.
- `documentation.zip`: contains a description of the IMC Segmentation Pipeline.
- `scripts.zip`: contains a Jupyter notebook to perform image pre-processing.
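`Object relationships.csv` in `cpout.zip` is described as an edge list of interacting cells, and a common first step is counting each cell's neighbours. The sketch below uses only the standard library. It assumes CellProfiler-style column names (`First Image Number`, `First Object Number`, `Second Image Number`, `Second Object Number`). Those names are an assumption, so check the header of the actual file. The toy edge list is made up for illustration and is not taken from the dataset.

```python
import csv
import io
from collections import defaultdict

# ASSUMPTION: these column names follow CellProfiler's relationship export.
# Check the header of your own "Object relationships.csv" and adjust.
FIRST = ("First Image Number", "First Object Number")
SECOND = ("Second Image Number", "Second Object Number")


def neighbour_counts(csv_file, first=FIRST, second=SECOND):
    """Map each (image number, object number) cell to its number of distinct neighbours."""
    neighbours = defaultdict(set)
    for row in csv.DictReader(csv_file):
        a = tuple(int(row[col]) for col in first)
        b = tuple(int(row[col]) for col in second)
        # Store both directions so the result is the same whether the
        # file lists each interacting pair once or twice.
        neighbours[a].add(b)
        neighbours[b].add(a)
    return {cell: len(others) for cell, others in neighbours.items()}


# Toy, made-up edge list (not from the dataset) to show the call.
toy = io.StringIO(
    "First Image Number,First Object Number,Second Image Number,Second Object Number\n"
    "1,1,1,2\n"
    "1,2,1,1\n"
    "1,2,1,3\n"
)
print(neighbour_counts(toy))  # {(1, 1): 1, (1, 2): 2, (1, 3): 1}
```

To use the real file, open it with `open("Object relationships.csv", newline="")` and pass the handle in. Keying cells by (image number, object number) keeps objects from different acquisitions apart.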

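The split, run, and gather step for large High Content Screening datasets mentioned earlier can be sketched with `concurrent.futures` from the standard library. `analyse_chunk` is a hypothetical placeholder for whatever actually processes a batch, for instance a headless CellProfiler run. In Airflow, each batch would more naturally be its own task, with the gather step downstream of all of them.

```python
from concurrent.futures import ThreadPoolExecutor


def split(items, n_chunks):
    """Split `items` into at most `n_chunks` contiguous, near-equal chunks."""
    size, extra = divmod(len(items), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        end = start + size + (1 if i < extra else 0)
        if end > start:  # skip empty chunks when there are fewer items than chunks
            chunks.append(items[start:end])
        start = end
    return chunks


def analyse_chunk(image_paths):
    """HYPOTHETICAL placeholder for one batch run (e.g. a headless CellProfiler call).

    Here it just reports which images the batch received.
    """
    return {"n_images": len(image_paths), "images": list(image_paths)}


def gather(results):
    """Merge per-batch results back into one summary."""
    return {
        "n_batches": len(results),
        "n_images": sum(r["n_images"] for r in results),
        "images": [p for r in results for p in r["images"]],
    }


def split_run_gather(image_paths, n_chunks=4, max_workers=4):
    chunks = split(image_paths, n_chunks)
    # Threads suffice when each batch mostly waits on an external process;
    # pool.map returns results in the same order as the chunks.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(analyse_chunk, chunks))
    return gather(results)


images = [f"img_{i:03d}.tiff" for i in range(10)]
summary = split_run_gather(images, n_chunks=3)
print(summary["n_batches"], summary["n_images"])  # 3 10
```

Because `pool.map` preserves chunk order, the gathered image list comes back in the original order. This makes it straightforward to line results up with plate or well metadata afterwards.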